Background:

A while ago, I stumbled upon two blogs about speed and PowerShell: first Guillaume Bordier’s blog about PowerShell and writing files, and second IT Idea’s blog on PowerShell Foreach vs ForEach-Object. While researching the matter, I also came across an article on PowerShell.com about speeding up loops (which wasn’t of use in my tests, but is still interesting and something to think about when creating a for loop).

For the first blog: I saw a flaw in the way he used Export-Csv, as the contents of the file were not the string “some text”. I also use the .net File class WriteAllLines a lot myself, which was not in his list.

Thus I decided to create my own test and try out the information found in both of the above blogs. Things got out of hand a little bit, which made me end up with the tool I have today and which I share with you. This way you can test them yourself or use my script as something to learn from. By creating this script I myself learnt a lot of things as well and used techniques which I hadn’t used before. Let me take you on the journey (if you’re not interested in the story and results, scroll down to the end of the post for the script):

Test Goals:

- I want the output to be the same in every test, with as little interruption as possible from variables needed next to the writing and looping; if they are needed, their time will be measured as well. (Thus: the file contents need to be the same, as far as possible.)

- I want to test the speed of each file write type

- I want to test the speed of each loop

- In case of export-csv, I want the output to be the same text as in each other file, but it’s allowed to have quotes around it, because that’s part of the csv format.

- I will use the default setting for each way to write to a file, I will just deliver a string/array (depending on the way to write) and a file name and let the command do its work.

The different ways to write to a file, which are used in the tests (minor number in the test files):

- export-csv -append

- >> output

- out-file

- out-file -append

- io file WriteAllLines (.net file class)

- .net streamwriter WriteLine (.net streamwriter class)

- io file WriteLine (.net file class)
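To make the list above concrete, here is a minimal sketch of how each write type is typically called. The folder, file names and five-line sample are my own placeholders and are not taken from the test script. The io file WriteLine variant is left out, because its exact call is not shown in this post. Note that Export-Csv also writes a header line for the property name.

```powershell
# Hypothetical scratch folder and sample data (not from the test script).
$dir = Join-Path ([System.IO.Path]::GetTempPath()) 'write-demo'
New-Item -ItemType Directory -Path $dir -Force | Out-Null
Remove-Item -Path (Join-Path $dir '*') -Force -ErrorAction SilentlyContinue
$lines = 1..5 | ForEach-Object { 'some text' }

# 1. Export-Csv -Append: needs an object with a property (PowerShell 3.0 or later)
foreach ($line in $lines) {
    [pscustomobject]@{ Text = $line } |
        Export-Csv -Path (Join-Path $dir '1.csv') -Append -NoTypeInformation
}

# 2. >> redirection: appends one line per call
$path2 = Join-Path $dir '2.txt'
foreach ($line in $lines) { $line >> $path2 }

# 3. Out-File: overwrites, so it receives the whole array once
$lines | Out-File -FilePath (Join-Path $dir '3.txt')

# 4. Out-File -Append: one line per call
foreach ($line in $lines) {
    $line | Out-File -FilePath (Join-Path $dir '4.txt') -Append
}

# 5. [System.IO.File]::WriteAllLines: the whole array in one call
[System.IO.File]::WriteAllLines((Join-Path $dir '5.txt'), [string[]]$lines)

# 6. StreamWriter.WriteLine: one call per line; Dispose flushes and closes the file
$writer = New-Object System.IO.StreamWriter (Join-Path $dir '6.txt')
try {
    foreach ($line in $lines) { $writer.WriteLine($line) }
}
finally {
    $writer.Dispose()
}
```

The .NET calls get full paths on purpose: they resolve relative paths against the process working directory, which is not always the current PowerShell location.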

The different loops, which are used in the tests (major number in the test files):

- 1..5000 | ForEach-Object

- Foreach 1..5000

- For x = 1 – 5000

- While x -ne 5000

- Do .. While x -ne 5000

- ForEach(x in [arr5000])

(I know there’s also a Do Until, but I figured there’s already a While and a Do.. While in here, so it probably wouldn’t matter that much; maybe I will add it later. If I missed some more, please tell me in the comments and I will try to add it.)
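For readers less familiar with the loop types listed above, this is a minimal sketch of the six shapes. It uses a count of 5 instead of 5000 and simply collects the counter values; the variable names are placeholders of mine and the bodies in the test script will differ.

```powershell
$count = 5   # the tests use 5000; kept small here

# 1. Range piped to ForEach-Object
$r1 = 1..$count | ForEach-Object { $_ }

# 2. foreach over a range
$r2 = foreach ($i in 1..$count) { $i }

# 3. for with an explicit counter
$r3 = for ($i = 1; $i -le $count; $i++) { $i }

# 4. while: the condition is checked before each pass
$i = 0
$r4 = while ($i -ne $count) { $i++; $i }

# 5. do..while: the body runs once before the first check
$i = 0
$r5 = do { $i++; $i } while ($i -ne $count)

# 6. foreach over an array that was built beforehand
$arr = 1..$count
$r6 = foreach ($item in $arr) { $item }
```

Each of the six variables ends up holding the values 1 through 5, so the loops do the same work and only the looping construct differs.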

Like I said before, once I started testing things, it got a little bit out of hand and I added more and more, also because the results were pretty interesting, but primarily because I enjoyed testing and learning about all the differences and new ways of doing things in my code.

The test results

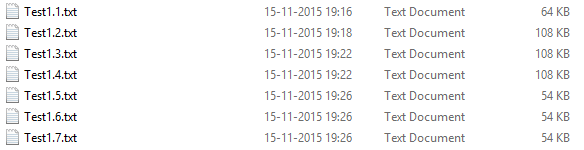

The first thing to notice when the script is complete is that the different write types give different file sizes. For the CSV export this is easily explained by the quotes around each text line (64kb instead of 54kb).

When you open them with my favorite text editor (Notepad++), it’ll tell you the different types of encoding that were used.

UTF-8 is used by Export-CSV, the .net file WriteAllLines & WriteLine and .net streamwriter WriteLine

The others use UCS-2 LE BOM, a double-byte encoding that stores each character of this text in two bytes, thus explaining the double file size.

(* Note – in most or all cases the file format can be specified, but I was interested in the default setting, as this would be the one used the most when I’ll use these commands)
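If you don’t have Notepad++ at hand, the byte order mark and the size can also be checked from PowerShell. The following is a rough sketch: Get-BomName is a hypothetical helper of mine (not part of the test script), and the two demo files are written with an explicit encoding so the outcome does not depend on the defaults of your PowerShell version.

```powershell
# Hypothetical helper: reports which byte order mark (if any) a file starts with.
# It reads the whole file, which is fine for small test files only.
function Get-BomName {
    param([Parameter(Mandatory = $true)][string]$Path)

    $bytes = [System.IO.File]::ReadAllBytes($Path)
    if ($bytes.Length -ge 3 -and $bytes[0] -eq 0xEF -and $bytes[1] -eq 0xBB -and $bytes[2] -eq 0xBF) {
        return 'UTF-8 BOM'
    }
    if ($bytes.Length -ge 2 -and $bytes[0] -eq 0xFF -and $bytes[1] -eq 0xFE) {
        return 'UTF-16 LE BOM'
    }
    return 'no BOM'
}

$tmp   = [System.IO.Path]::GetTempPath()
$utf8  = Join-Path $tmp 'bom-demo-utf8.txt'
$utf16 = Join-Path $tmp 'bom-demo-utf16.txt'

# Same text twice: once as UTF-8 (the .NET default, no BOM), once as UTF-16 LE
[System.IO.File]::WriteAllText($utf8, "some text`r`n")
[System.IO.File]::WriteAllText($utf16, "some text`r`n", [System.Text.Encoding]::Unicode)

foreach ($file in $utf8, $utf16) {
    '{0}: {1}, {2} bytes' -f (Split-Path $file -Leaf), (Get-BomName $file), (Get-Item $file).Length
}
```

The UTF-16 file comes out at a little more than twice the size of the UTF-8 one: two bytes per character plus the two-byte mark at the start.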

After I saw these differences, I also noticed the time differences, which I color coded so it’s easier to find the best and worst ones. At the end of its run, the tool shows 2 views on the results: one based on the types of loop and one based on the types of writing to the files.

In the end I limited the script to creating test files with a maximum of 50 million lines (which is a 524MB UTF-8 file), but you can easily override that by commenting the limit out.

The slow writing types and loops will in most cases be exponentially slower when using larger files, but testing with a minimum of 5000 lines will give a decent result. It is possible to go as low as 50 lines, but then the quick writing types will be done within 0 milliseconds. Even with only 50 lines to write, some of the ways will take nearly 1 second, thus already lagging your script if you use them.

All test results show that ForEach-Object is one of the slowest loops. The quickest seems to be the ForEach loop, but Do .. While, While and For follow with a difference of just several milliseconds. It would be quicker to create a list of the objects which you’d otherwise run through ForEach-Object and create a ForEach loop to work with that list. This would probably be about 30 seconds quicker with a loop that runs 5000 times. One interesting little thing is that the Do .. While loop is a little bit quicker than the While loop (maybe because the Do already initiates the start of the loop, without knowing the conditions?). That started me wondering about the Do .. Until loop, which I will probably add to the types of loop to test. But first I wanted to share my findings and script with you.
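The idea of collecting the objects first and then using a ForEach loop can be sketched as follows. This is only an illustration with a trivial body and a Stopwatch around each variant; the names are placeholders of mine, and the timings will differ per machine and PowerShell version, so none are shown here.

```powershell
$items = 1..5000   # the list you would otherwise pipe into ForEach-Object

# Variant 1: pipeline with ForEach-Object
$sw = [System.Diagnostics.Stopwatch]::StartNew()
$viaPipeline = $items | ForEach-Object { $_ * 2 }
$sw.Stop()
$pipelineMs = $sw.Elapsed.TotalMilliseconds

# Variant 2: foreach statement over the same list
$sw = [System.Diagnostics.Stopwatch]::StartNew()
$viaForeach = foreach ($item in $items) { $item * 2 }
$sw.Stop()
$foreachMs = $sw.Elapsed.TotalMilliseconds

'ForEach-Object: {0:N1} ms, foreach: {1:N1} ms' -f $pipelineMs, $foreachMs
```

Both variants produce the same 5000 values, so any difference in the reported times comes from the loop construct itself.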

On the writing part there are a lot of differences to see.

The .net File class WriteAllLines (which I thought was using streamwriter, but it is filestream) is performing well with small files, but once they get bigger, it is easily outperformed by the .net File class WriteLine and .net Streamwriter. Those two are the quickest, with the io file WriteLine leading on top on all tests and loops.

The test results in screenshots:

(The first one is a screenshot of test results as put into an Excel sheet and comparing 3 test runs; the second one is a screenshot of the results of the script with its default parameters, thus all tests and 5000 lines per file; the third one is a screenshot of the results of the script with 50 million lines (524MB UTF-8 file) written and only the quickest 2 writing methods. The red line (which is marked worst) is actually very good, but since it’s the slowest in the list, it gets this marking)

I’m pretty impressed by the results as well; writing 524MB, line by line in 10.4 seconds.

My conclusion:

Only use ForEach-Object if you really really really (yes, I just did repeat the word really 3 times) need to, because it’s very bad for the performance of your script. If it’s at all possible, just create a ForEach loop and you will spend a lot less time waiting on your scripts to complete (which makes you and others around you that use your scripts happy). There are some valid reasons to use it anyway, as described on the IT Idea blog (mentioned at the top of the article), to which Jeffery Hicks also replied with a valid reason to use foreach, though his example may be lacking a bit.

If you were considering using export-csv, >>, out-file append or out-file: they all take from 1 second up to 40 seconds for a 5000-line file (54kb in UTF-8), so I would recommend not using those. In case of getting data to a CSV file, you can always use ConvertTo-Csv and then use any of the other writing methods to save your data. Or if you’re into quick and dirty solutions, just put quotes around the strings you save and then use any of the quick writing methods to save this to a file.
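A minimal sketch of the ConvertTo-Csv route, assuming some sample objects with two properties (the property names, sample data and file name are placeholders of mine): ConvertTo-Csv turns the objects into CSV text lines in memory, and one of the quick writers then saves them in a single call.

```powershell
# Hypothetical sample data
$rows = 1..3 | ForEach-Object { [pscustomobject]@{ Id = $_; Text = 'some text' } }

# Convert to CSV lines in memory; the first line is the header
$csvLines = $rows | ConvertTo-Csv -NoTypeInformation

# Save all lines at once with the .net File class
$csvPath = Join-Path ([System.IO.Path]::GetTempPath()) 'csv-demo.csv'
[System.IO.File]::WriteAllLines($csvPath, [string[]]$csvLines)

# The file can still be read back as regular CSV
Import-Csv -Path $csvPath
```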

If you were considering and/or using .net File class WriteAllLines (like I am/was): you’re already on the right track, and it is a quick way to save your data, but move on to one of the two quickest ones on the block:

- .net File WriteLine

- .net StreamWriter WriteLine

Which one of those to use? I’d say get my test script and test it yourself. On my desktop and laptop computer I got different time results, but the winner (by a hair) was the .net File class with WriteLine.

So I know that from now on I’ll be using that one a lot more, in combination with a ForEach or For loop; which both perform the best.

What I learned by creating this

On the learning part for me (when creating this script), I started learning about using dynamic script block names, putting a console cursor on a certain place (doesn’t work on PowerShell 2.0 by the way), formatting strings, dynamic variables, how to properly write my text with export-csv (which I’ll never use again) and probably more.

I was confused about the .net File class: since it uses filestream, I had assumed it was the same type of stream as streamwriter; but the test results showed me a difference in timing, so I also learned that there’s a difference between filestream and streamwriter.

Nice piece of code

I believe this to be a very nice line in the script (as it calls all the different tests and loops) :

Invoke-Command -ScriptBlock (Get-Variable -Name "$TestName" -ValueOnly) -ArgumentList $s,$TestName,$TestNumber,$TotalTestText
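To show what that line does in isolation, here is a small sketch. The script blocks, their parameters and their output are made up for the example and are much simpler than the ones in the test script. Each test lives in a variable, and the variable name is built as a string, so Get-Variable can fetch the matching script block and hand it to Invoke-Command.

```powershell
# Hypothetical test blocks stored in variables named Test1 and Test2
$Test1 = { param($Count, $Name) "$Name wrote $Count lines" }
$Test2 = { param($Count, $Name) "$Name looped $Count times" }

$results = foreach ($n in 1..2) {
    # Build the variable name dynamically, then look up the script block by that name
    $TestName = "Test$n"
    Invoke-Command -ScriptBlock (Get-Variable -Name $TestName -ValueOnly) -ArgumentList 5000, $TestName
}

$results
```

The values in -ArgumentList are bound in order to the param() block of the script block that gets called.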

If you believe there’s anything missing in my tests, or things can be done in a better and/or different way, let me know below in the comments.

Optional parameters:

- LoopStartTest (default value = 1; run all tests). If you want only the quick loops, start from 2

- TestLines (default value = 5000; max = 50000000; 50 million). The number of lines to write to each test file. Going below 1000 will result in writing methods completing within 0ms

- WriteStartTest (default value = 1; run all tests). If you want only the quickest 2 writers, set the value to 6, if you want to include WriteAllLines set it to 5 (which can also be pretty quick, depending on amount of lines and file size)

Once you set the TestLines value above 1 million, it is advised to start from writing method 5 and loop test 2. Still, I am interested in your results. Do they compare to mine? If you do have the time and patience to wait for the slow ones on files above 100k lines, please let me know the results below in the comments. I don’t think the color coding will still be any good, though I tried to tie a formula to it to keep it within acceptable ranges. If you’ve got the patience to run this script to create files larger than 50 million lines (524MB in UTF-8 encoding), you can comment out the limit in the script.

The test script can be found here: Test-LoopsAndWriteFile.ps1

Note for PowerShell 2.0 users: The Append parameter is not implemented for Export-Csv, thus this test is skipped if PowerShell 2.0 is detected.

I am suffering with a complex problem in that I need to read 300MB files, check the line, and data and then write that data back to another file. I’ve not found an approach that doesn’t take forever, almost literally.

Would you be able to provide some guidance on an approach that I can investigate?

I cannot download the powershell in this post due to corporate restrictions on downloads


Hi, like I say in the article, these 2 are the quickest; they also have a ReadLine version, which works very quickly:

.net File WriteLine

.net StreamWriter WriteLine
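As a starting point, reading and writing line by line could look roughly like this. It is a sketch under assumptions: the file names, the three sample lines and the "starts with keep" check are placeholders, so replace the check with whatever test your lines need. Only one line is held in memory at a time, which is what makes this workable for large files.

```powershell
# Hypothetical input and output files, with a tiny sample input
$inPath  = Join-Path ([System.IO.Path]::GetTempPath()) 'filter-demo-in.txt'
$outPath = Join-Path ([System.IO.Path]::GetTempPath()) 'filter-demo-out.txt'
[System.IO.File]::WriteAllLines($inPath, [string[]]@('keep 1', 'skip 2', 'keep 3'))

$reader = New-Object System.IO.StreamReader $inPath
$writer = New-Object System.IO.StreamWriter $outPath
try {
    # ReadLine returns $null at the end of the file; empty lines come back as ''
    while ($null -ne ($line = $reader.ReadLine())) {
        # Placeholder check: keep only the lines that start with 'keep'
        if ($line.StartsWith('keep')) {
            $writer.WriteLine($line)
        }
    }
}
finally {
    # Dispose flushes the writer and releases both files
    $writer.Dispose()
    $reader.Dispose()
}
```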

Happy scripting.
